Appendix G NAEP 2022 Sample Design

National Assessment of Educational Progress (NAEP) 2026

OMB: 1850-0928

NATIONAL CENTER FOR EDUCATION STATISTICS

NATIONAL ASSESSMENT OF EDUCATIONAL PROGRESS

OMB# 1850-0928 v.36

May 2025

The 2022 Sample Design documentation is the most current version available to the public for a state-level NAEP assessment year. At this time, there is no timeline for when the details for later assessment years will be publicly available.

NAEP Technical Documentation Website

NAEP Technical Documentation NAEP 2022 Sample Design

The sample design for NAEP 2022 included samples for various operational assessments. Representative samples were drawn for the following operational assessments:

- long-term trend (LTT) age 9 and age 13 national assessments in mathematics and reading in public and private schools;
- national assessments in civics and U.S. history in public and private schools at grade 8;
- national assessments in mathematics and reading in private schools at grades 4 and 8; and
- state-by-state assessments and Trial Urban District Assessments (TUDA) in mathematics and reading in public schools at grades 4 and 8.

The samples for the operational assessments were organized into eight distinct groupings and sampled separately as follows:

- mathematics and reading assessments in public schools at grades 4 and 8;
- mathematics and reading assessments in private schools at grades 4 and 8;
- civics and U.S. history assessments in public schools at grade 8;
- civics and U.S. history assessments in private schools at grade 8;
- mathematics and reading LTT assessments in public schools at age 9;
- mathematics and reading LTT assessments in private schools at age 9;
- mathematics and reading LTT assessments in public schools at age 13; and
- mathematics and reading LTT assessments in private schools at age 13.

The grade 4 and grade 8 assessments were all digitally based assessments (DBA) administered using tablets. The LTT assessments were paper-based assessments (PBA) administered using paper and pencil. LTT age 9 was administered in the winter of 2022 and LTT age 13 was administered in the fall of 2022 (in a different school year than age 9).

The national assessments were designed to achieve nationally representative samples of public and private school students in the fourth or eighth grades (or public and private school students who were age 9 or 13 in the case of LTT). The target populations included all students in public, private, Bureau of Indian Education (BIE), and Department of Defense Education Activity (DoDEA) schools who were enrolled in grades 4 or 8 (or who were age 9 or 13 in the case of LTT) at the time of assessment. DoDEA schools for LTT and the grade 8 civics and U.S. history assessments were limited to those located in the U.S. (not overseas).

For the fourth- and eighth-grade mathematics and reading assessments in public schools, the TUDA samples formed part of the corresponding state public school samples, and the state samples formed the public school grades 4 and 8 part of the national sample. Nationally representative samples were drawn for civics and U.S. history and for the remaining populations of private school students, DoDEA students, and BIE students separately by grade.

The state assessments were designed to achieve representative samples of students in the respective grade. At grades 4 and 8, the target populations included all students in each participating jurisdiction, which included states, the District of Columbia, BIE, DoDEA, and school districts chosen for the TUDA. For each grade and assessment subject, samples were designed to produce aggregate estimates with adequate precision for all the participating jurisdictions, as well as estimates for various student subpopulations of interest.

A one-time feature of some of the 2022 samples was maximum overlap with other earlier NAEP samples. This was done to facilitate certain analyses related to the fact that 2022 was the first assessment year after the COVID-19 pandemic. The state samples were selected to have maximum overlap with the school samples for the NAEP 2021 Monthly School Survey and the NAEP 2021 School and Teacher Questionnaire Study. The LTT samples were selected to have maximum overlap with the NAEP 2020 LTT age 9 and age 13 school samples, respectively. This overlap control was achieved for these samples by using an adaptation of the Keyfitz process.

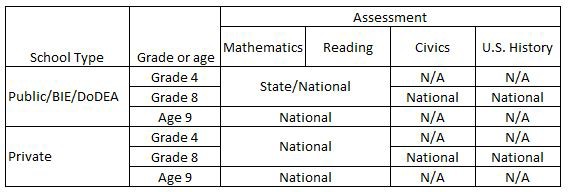

The figure below illustrates the various sample types and subjects.

Components of the NAEP samples, by assessment subject, grade or age, and school type: 2022

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 Assessments.

http://nces.ed.gov/nationsreportcard/tdw/sample_design/2022/naep_2022_sample_design.aspx

NAEP Technical Documentation Sample Design for the 2022 National Grade-Based Assessment

The 2022 national assessment included operational assessments in mathematics and reading in public and private schools at grades 4 and 8, and operational assessments in civics and U.S. history in public and private schools at grade 8.

The sample designs aimed to achieve nationally representative samples of students in the defined populations who were enrolled at the time of assessment.

The samples were based on a two-stage sample design:

- selection of schools within strata; and
- selection of students within schools.

The samples of schools were selected with probability proportional to a measure of size based on the estimated grade-specific enrollment in the schools.

For fourth- and eighth-grade public schools, the aggregate of the NAEP state student samples and assessments in mathematics and reading constitutes the corresponding NAEP national student samples and assessments.

The samples for the remaining national assessments were organized into three distinct groupings and selected separately:

- mathematics and reading assessments in private schools at grades 4 and 8;
- civics and U.S. history assessments in public schools at grade 8; and
- civics and U.S. history assessments in private schools at grade 8.

All of the grade 4 and grade 8 assessments were digitally based assessments (DBA) administered using tablets.

NAEP Technical Documentation 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The eighth-grade private school samples for the national assessments in civics and U.S. history were designed to produce nationally representative samples of students enrolled in grade eight in private schools in the United States. The target sample size of assessed students for the grade eight private school sample was 1,600 (800 per subject). Prior to sampling, the target sample sizes were adjusted upward to offset expected school and student attrition due to nonresponse and ineligibility.
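The upward adjustment can be illustrated with a small calculation. The sketch below assumes the target is divided by the product of the expected eligibility, school response, and student response rates. The source does not state the formula or the planning rates that were used, so the function name `inflate_target` and every rate value shown are hypothetical.

```python
import math


def inflate_target(target_assessed, eligibility_rate,
                   school_response_rate, student_response_rate):
    """Return the number of students to sample so that, after expected
    attrition, roughly `target_assessed` students are assessed.

    Assumption (not from the source): losses are independent, so the
    expected yield is the product of the three rates.
    """
    expected_yield = (eligibility_rate
                      * school_response_rate
                      * student_response_rate)
    if not 0 < expected_yield <= 1:
        raise ValueError("each rate must lie in (0, 1]")
    return math.ceil(target_assessed / expected_yield)


# Hypothetical rates for illustration only; these are not NAEP planning values.
students_to_sample = inflate_target(
    1600,
    eligibility_rate=0.8,
    school_response_rate=0.5,
    student_response_rate=0.9,
)
print(students_to_sample)
```

The lower the expected response or eligibility, the larger the initial sample must be to end up near the 1,600 assessed students targeted.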

Samples were selected using a two-stage probability-based design that involved selection of schools from within strata and selection of students within schools. The first-stage sample of schools was selected with probability proportional to a measure of size based on estimated grade-specific enrollment in the schools.

The sampling of students at the second stage involved two steps: (1) sampling of students in the targeted grade (eighth) from each sampled school, and (2) assignment of assessment subject (civics or U.S. history) to the sampled students.

NAEP Technical Documentation Ineligible Schools for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The Private School Universe Survey (PSS)-based sampling frame school file, from which most of the sampled schools were drawn, corresponds to the 2019–2020 school year, two years prior to the assessment school year. During the intervening period, some of these schools either closed, no longer offered the grade of interest, or were ineligible for other reasons. In such cases, the sampled schools were coded as ineligible.

NAEP Technical Documentation Eligibility Status of Schools Sampled for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The following table shows the unweighted counts and percentages of sampled schools that were eligible and ineligible, by reason for ineligibility, for the eighth-grade private school sample for the national assessments in civics and U.S. history.

Sampled private schools, eighth-grade national assessment, by eligibility status: 2022

| Eligibility status | Unweighted count of schools | Unweighted percentage |
|---|---|---|
| All sampled private schools | 170 | 100.00 |
| Eligible | 130 | 79.29 |
| Ineligible | 35 | 20.71 |
| Has sampled grade, but no eligible students | 2 | 1.18 |
| Does not have sampled grade | 8 | 4.73 |
| Closed | 7 | 4.14 |
| Not a regular school | 17 | 10.06 |
| Duplicate on sampling frame | 0 | 0.00 |
| Other ineligible school | 1 | 0.59 |

NOTE: Numbers of schools are rounded to nearest ten, except those pertaining to ineligible schools. Detail may not sum to total due to rounding. Percentages are based on unrounded counts.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation Total and Eligible Sampled Schools for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The following table presents unweighted counts and percentages of ineligible and eligible schools by private school affiliation in the eighth-grade private school sample for the national assessments in civics and U.S. history. Schools whose private school affiliation was unknown at the time of sampling subsequently had their affiliation determined during data collection. Therefore, such schools are not broken out separately and not included in the following table.

Eligibility status of sampled private schools, eighth-grade national assessment, by private school type: 2022

| Private school type | Eligibility status | Unweighted count | Unweighted percentage |
|---|---|---|---|
| All private | Total | 140 | 100.00 |
| | Ineligible | 20 | 14.29 |
| | Eligible | 120 | 85.71 |
| Roman Catholic | Total | 40 | 100.00 |
| | Ineligible | 0 | 0.22 |
| | Eligible | 40 | 100.00 |
| Other private | Total | 110 | 100.00 |
| | Ineligible | 20 | 18.18 |
| | Eligible | 90 | 81.82 |

NOTE: Numbers of schools are rounded to nearest ten. Detail may not sum to total due to rounding. Percentages are based on unrounded counts.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation Sampling Frame for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The primary sampling frame for the 2022 eighth-grade private school sample for the national assessments in civics and U.S. history was developed from the Private School Universe Survey (PSS) corresponding to the 2019–2020 school year. The PSS file is the Department of Education’s primary database of elementary and secondary private schools in the 50 states and the District of Columbia, and it is based on a survey conducted by the U.S. Census Bureau during the 2019–2020 school year.

This sampling frame is referred to as the PSS-based sampling frame.

Nonrespondents to the PSS were also included in the primary sampling frame. Since these schools did not respond to the PSS, their private school affiliation is unknown. Because NAEP response rates differ vastly by affiliation, to better estimate the target sample size of schools for each affiliation, additional work was done to obtain affiliation for these PSS nonrespondents. If a nonresponding school responded to a previous PSS (either two or four years prior), affiliation was obtained from the previous response. For those schools that were nonrespondents for the last two cycles of the PSS, in some cases internet research was used to establish affiliation. There were still schools with unknown affiliation remaining after this process.

A secondary sampling frame was also created for this sample to account for schools that newly opened or became newly eligible between the 2019–2020 and 2021–2022 school years. This frame contains brand-new and newly-eligible eighth-grade schools and is referred to as the new-school sampling frame. Because there are no sources available to identify new schools for non-Catholic private schools, the new-school frame for private schools contains only Catholic schools.

Both sets of sampling frames excluded schools that were ungraded, provided only special education, were part of hospital or treatment center programs, were juvenile correctional institutions, were home-school entities, or were for adult education.

NAEP Technical Documentation Eighth-Grade Schools and Enrollment in the 2022 Private School Civics and U.S. History Sampling Frame

The following table presents the numbers of eighth-grade private schools and estimated enrollments, as contained in the Private School Universe Survey (PSS)-based sampling frame, by private school affiliation, for the national assessments in civics and U.S. history.

The counts presented below are of schools with known affiliation. Schools with unknown affiliation do not appear in the table because their grade span, affiliation, and enrollment were unknown. Although PSS is a school universe survey, participation is voluntary and not all private schools respond. Since the NAEP sample must represent all private schools, not just PSS respondents, a small sample of PSS nonrespondents with unknown affiliation was selected to improve NAEP coverage.

Number of schools and enrollment in eighth-grade private school sampling frame, national assessment, by affiliation: 2022

| Affiliation | Number of schools | Estimated enrollment |
|---|---|---|
| Total | 16,808 | 314,627 |
| Catholic | 4,426 | 123,133 |
| Non-Catholic | 12,382 | 191,494 |

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation New-School Sampling Frame for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The NAEP 2022 private school frame was constructed using the most current Private School Universe Survey (PSS) file available from NCES. This file contained schools that were in existence during the 2019–2020 school year (i.e., it was two years out of date). During the subsequent 2-year period, undoubtedly, some schools closed, some changed structure (one school becoming two schools, for example), some newly opened, and still others changed their grade span.

A supplemental sample was selected from a list of Catholic schools that were new or had become newly eligible sometime after the 2019–2020 school year. The goal was to allow every new Catholic school a chance of selection, thereby fully covering the target population of Catholic schools in operation during the 2021–2022 school year. It was infeasible to ask every Catholic diocese in the United States to provide a supplemental school frame, so a two-stage procedure was employed. First, a sample of dioceses was selected. Then the National Catholic Educational Association (NCEA) was sent a list of the schools within their sampled dioceses that had been present on the 2019–2020 PSS file. NCEA was asked to add in any new schools and update grade span for the schools on this list.

The new-school process began with the preparation of a diocese-level frame. The starting point was a file containing every Catholic diocese in the United States classified as small, medium, or large based on the number of schools and student enrollment of schools from the PSS private school frame.

A diocese was considered to be small if it contained no more than one school at each targeted grade (4 and 8). During school recruitment, schools sampled from small dioceses were asked to identify schools within their dioceses that newly offered the targeted grade. Every identified new school was added to the sample. From a sampling perspective, the new school was viewed as an "annex" to the sampled school, which meant that it had a well-defined probability of selection equal to that of the sampled school. When a school in a small diocese was sampled from the PSS frame, its associated new school was automatically sampled as well.

Dioceses that were not small were further divided into two strata, one containing large-size dioceses and a second containing medium-size dioceses. These strata were defined by computing the percentage of grade 4 and 8 enrollment represented by each diocese, sorting in descending order, and cumulating the percentages. All dioceses up to and including the first diocese at or above the 80th cumulative percentage were defined as large dioceses. The remaining dioceses were defined as medium dioceses.

A simplified example is given below. The dioceses are ordered by descending percentage enrollment. The first six become large dioceses and the last six become medium dioceses.

Example showing assignment of Catholic dioceses to the large-size and medium-size diocese strata, private school grade 8 national assessment: 2022

| Diocese | Percentage enrollment | Cumulative percentage enrollment | Stratum |
|---|---|---|---|
| Diocese 1 | 20 | 20 | L |
| Diocese 2 | 20 | 40 | L |
| Diocese 3 | 15 | 55 | L |
| Diocese 4 | 10 | 65 | L |
| Diocese 5 | 10 | 75 | L |
| Diocese 6 | 10 | 85 | L |
| Diocese 7 | 5 | 90 | M |
| Diocese 8 | 2 | 92 | M |
| Diocese 9 | 2 | 94 | M |
| Diocese 10 | 2 | 96 | M |
| Diocese 11 | 2 | 98 | M |
| Diocese 12 | 2 | 100 | M |

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

In actuality, there were 77 large and 96 medium dioceses in the sampling frame.
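The cumulative-percentage rule used to split dioceses into the large and medium strata can be sketched in a few lines of Python. The function name `assign_diocese_strata` and the dictionary layout are illustrative assumptions. The input values are those of the simplified twelve-diocese example, not the actual frame.

```python
def assign_diocese_strata(pct_enrollment, cutoff=80.0):
    """Label each diocese 'L' (large) or 'M' (medium).

    Dioceses are sorted by descending share of enrollment and the shares
    are cumulated. Every diocese up to and including the first one whose
    cumulative share is at or above `cutoff` is large; the rest are medium.
    """
    ordered = sorted(pct_enrollment.items(),
                     key=lambda item: item[1], reverse=True)
    strata = {}
    cumulative = 0.0
    cutoff_reached = False
    for name, pct in ordered:
        cumulative += pct
        strata[name] = "M" if cutoff_reached else "L"
        if cumulative >= cutoff:
            # This diocese is still large; all later ones are medium.
            cutoff_reached = True
    return strata


# Percentages from the simplified example (Diocese 1 through Diocese 12).
example = dict(zip(
    [f"Diocese {i}" for i in range(1, 13)],
    [20, 20, 15, 10, 10, 10, 5, 2, 2, 2, 2, 2],
))
strata = assign_diocese_strata(example)
large = [name for name, label in strata.items() if label == "L"]
print(large)
```

With the example data, Diocese 6 is the first to reach the 80th cumulative percentage (85), so it is the last diocese assigned to the large stratum.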

The target sample size was 10 dioceses total across the medium and large diocese strata: eight dioceses from the large-size diocese stratum and two from the medium-size diocese stratum.

In the medium-size diocese stratum, dioceses were selected with equal probability. In the large-size diocese stratum, dioceses were sampled with probability proportional to enrollment. These probabilities were retained and used in later stages of sampling and weighting of new schools.
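The two selection schemes imply different diocese selection probabilities, which can be sketched as follows. The helper names are hypothetical, and the probability-proportional-to-size formula shown (sample size times the unit's share of the stratum total, capped at 1) is the standard textbook form, assumed here because the source does not give the exact computation. The stratum counts and sample sizes (2 of 96 medium, 8 large) come from the text; the enrollment figures are made up.

```python
def equal_probability(n_sample, n_units):
    """Selection probability when every unit in the stratum is equally likely."""
    return n_sample / n_units


def pps_probability(n_sample, size, total_size):
    """Selection probability under probability proportional to size.

    Assumption: the nominal inclusion probability n * size / total,
    capped at 1 for units large enough to be selected with certainty.
    """
    return min(1.0, n_sample * size / total_size)


# Medium-size stratum: 2 dioceses drawn from 96 with equal probability.
medium_p = equal_probability(2, 96)

# Large-size stratum: 8 dioceses drawn with probability proportional to
# enrollment. The enrollment figures below are hypothetical.
large_p = pps_probability(8, size=3.0, total_size=100.0)

# The inverse of the probability is the weight carried into later stages.
medium_weight = 1 / medium_p
large_weight = 1 / large_p
print(medium_p, large_p, medium_weight, large_weight)
```

Retaining these probabilities matters because a new school's overall chance of selection is the product of its diocese's probability and its own within-diocese probability.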

NCEA was sent a listing of all the schools in the selected dioceses that appeared on the 2019–2020 PSS file and was asked to provide information about the new schools not included in the file and grade span changes of existing schools. These listings were used as sampling frames for selection of new Catholic schools and updates of existing schools.

The following table presents the number and percentage of schools and average estimated grade enrollment for the eighth-grade new-school frame by census region. There were no new schools in the Midwest region.

Eighth-grade new-school frame for the private school national assessment, number and percentage of schools and mean estimated enrollment, by census region: 2022

| Census region | Schools | Percentage | Mean school size |
|---|---|---|---|
| Total | 16 | 100.00 | 35 |
| Northeast | 11 | 68.75 | 39 |
| Midwest | 0 | 0.00 | 0 |
| South | 3 | 18.75 | 15 |
| West | 2 | 12.50 | 39 |

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Grade 8 Civics and U.S. History Assessments.

NAEP Technical Documentation School and Student Participation in the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The tables in the sections that follow present weighted school and student participation rates, student exclusion rates, and student full-time remote rates for the eighth-grade private school national civics and U.S. history samples.

A weighted school participation rate indicates the percentage of the student population that is directly represented by the participating school sample.

A weighted student participation rate indicates the percentage of the student population that is directly represented by the assessed students from within participating schools.

A weighted exclusion rate indicates the percentage of students in the population that would be excluded from the assessment. Students are generally excluded from a NAEP assessment if they have a disability or limited English language proficiency that prevents them from taking the assessment altogether, or if the accommodations they require to take the assessment are unavailable.

A weighted full-time remote rate indicates the percentage of the student population that is full-time remote.

Weighted school participation rates are calculated by dividing the sum of school base weights, weighted by student enrollment of the targeted grade, for all participating schools by the sum of the base weights, weighted by student enrollment of the target grade, for all eligible schools. Eligible schools are all sampled schools except those considered out-of-scope. The base weight is assigned to all sampled schools and is the inverse of the probability of selection. The weighted school participation rates in these tables reflect participation prior to substitution. That is, participating substitute schools that took the place of refusing originally sampled schools are not included in the numerator.
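The school-level calculation described above can be written as a short Python sketch. The `SampledSchool` record and its field names are hypothetical, and the three schools in the usage example are invented. Only the formula itself follows the text.

```python
from dataclasses import dataclass


@dataclass
class SampledSchool:
    base_weight: float   # inverse of the school's selection probability
    enrollment: int      # estimated enrollment in the targeted grade
    eligible: bool       # False for out-of-scope schools
    participated: bool   # originally sampled school took part (substitutes excluded)


def weighted_school_participation_rate(schools):
    """Percent of the student population represented by participating schools."""
    numerator = sum(s.base_weight * s.enrollment
                    for s in schools if s.eligible and s.participated)
    denominator = sum(s.base_weight * s.enrollment
                      for s in schools if s.eligible)
    return 100.0 * numerator / denominator


# Invented records for illustration only.
schools = [
    SampledSchool(base_weight=10.0, enrollment=50, eligible=True, participated=True),
    SampledSchool(base_weight=20.0, enrollment=25, eligible=True, participated=False),
    SampledSchool(base_weight=5.0, enrollment=100, eligible=False, participated=False),
]
rate = weighted_school_participation_rate(schools)
print(rate)
```

Because each school is weighted by both its base weight and its enrollment, the rate reflects the share of students represented, not the share of schools that agreed to participate.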

Weighted student participation rates are calculated by dividing the sum of the student base weights for all assessed students by the sum of the student base weights for all assessable students. (See below for the response dispositions of NAEP sampled students.) Students deemed assessable are those who were assessed or absent. They do not include students that were not eligible (primarily made up of withdrawn or graduated students) or students with disabilities (SD) or English learners (EL) who were excluded from the assessment.

Weighted student exclusion rates are calculated by dividing the sum of the school nonresponse-adjusted student base weights for all excluded students by the sum for all assessable and excluded students.

Weighted student full-time remote rates are calculated by dividing the sum of the school nonresponse-adjusted student base weights for all full-time remote students by the sum for all assessable, excluded, and full-time remote students.
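The three student-level rates differ only in which dispositions enter the numerator and denominator and in which weight is summed. The sketch below makes that explicit. The tuple layout, the disposition labels, and the weights in the example are hypothetical.

```python
from collections import defaultdict


def weighted_student_rates(students):
    """Return (participation, exclusion, full_time_remote) rates in percent.

    `students` is an iterable of (disposition, base_weight, adjusted_weight),
    where adjusted_weight is the school nonresponse-adjusted base weight and
    disposition is one of: 'assessed', 'absent', 'excluded', 'withdrawn',
    'remote'.
    """
    base = defaultdict(float)
    adjusted = defaultdict(float)
    for disposition, base_weight, adjusted_weight in students:
        base[disposition] += base_weight
        adjusted[disposition] += adjusted_weight

    # Assessable students are those who were assessed or absent.
    assessable_base = base["assessed"] + base["absent"]
    assessable_adj = adjusted["assessed"] + adjusted["absent"]

    participation = 100.0 * base["assessed"] / assessable_base
    exclusion = 100.0 * adjusted["excluded"] / (
        assessable_adj + adjusted["excluded"])
    remote = 100.0 * adjusted["remote"] / (
        assessable_adj + adjusted["excluded"] + adjusted["remote"])
    return participation, exclusion, remote


# Invented weights for illustration only.
sample = [
    ("assessed", 10.0, 12.0),
    ("assessed", 10.0, 12.0),
    ("absent", 5.0, 6.0),
    ("excluded", 5.0, 6.0),
    ("withdrawn", 5.0, 6.0),   # ineligible: enters none of the rates
    ("remote", 3.0, 4.0),
]
participation, exclusion, remote = weighted_student_rates(sample)
print(participation, exclusion, remote)
```

Withdrawn or graduated students appear in neither numerator nor denominator, which matches their treatment as ineligible.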

Every student sampled for NAEP is classified into one of the following response disposition categories:

- Assessed
- Absent
- Excluded (must be SD, EL, or SD and EL)
- Withdrawn or Graduated (ineligible)
- Full-time remote

Assessed students were students that completed an assessment.

Absent students were students who were eligible to take an assessment but were absent from the initial session and the makeup session if one was offered. (Note, some schools, not all, had make-up sessions for students who were absent from the initial session.)

Excluded students were determined by their school to be unable to meaningfully take the NAEP assessment in their assigned subject, even with an accommodation. Excluded students must also be classified as SD and/or EL.

Withdrawn or graduated students are those who have left the school before the original assessment. These students are considered ineligible for NAEP. Full-time remote students are enrolled in brick-and-mortar schools but do not attend school in person. They are considered not assessable for NAEP.

NAEP Technical Documentation Weighted School Response Rates for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The following table presents unweighted counts of eligible sampled and participating schools and weighted school response rates, by school type, for the eighth-grade private school national civics and U.S. history samples.

A weighted school response rate indicates the percentage of the student population that is directly represented by the participating school sample. These response rates are based on the original sample of schools (excluding substitutes).

Eligible and participating school counts and weighted school response rates for eighth-grade private schools, national civics and U.S. history assessments, by school type: 2022

| School type | Number of eligible sampled schools | Number of participating schools | Weighted school response rate (percent) |
|---|---|---|---|
| All private | 130 | 50 | 33.59 |
| Catholic | 40 | 30 | 61.74 |
| Non-Catholic | 100 | 20 | 15.03 |

NOTE: Detail may not sum to total due to rounding. Percentages are based on unrounded counts.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation Weighted Student Response and Exclusion Rates for the 2022 Eighth-Grade Private School National Civics Assessment

The following table presents weighted student response, exclusion, and full-time remote rates, by school type, for eighth-grade private school students in the national civics sample. Separate exclusion rates are provided for students with disabilities (SD) and English learners (EL).

A weighted student response rate indicates the percentage of the student population that is directly represented by the assessed students from within participating schools. A weighted exclusion rate indicates the percentage of students in the population that would be excluded from the assessment. The weighted student full-time remote rate indicates the percentage of the student population that is full-time remote (enrolled in brick-and-mortar schools but do not attend school in person).

Weighted student response, exclusion, and full-time remote rates for eighth-grade private schools, national civics assessment, by school type: 2022

| School type | Weighted student response rate (percent) | Weighted percentage of all students who were SD and excluded | Weighted percentage of all students who were EL and excluded | Weighted student full-time remote rate (percent) |
|---|---|---|---|---|
| All private | 92.30 | # | # | 0.25 |
| Catholic | 91.89 | # | # | # |
| Non-Catholic | 93.55 | # | # | 0.41 |

# Rounds to zero.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 Civics Assessment.

NAEP Technical Documentation Weighted Student Response and Exclusion Rates for the 2022 Eighth-Grade Private School National U.S. History Assessment

The following table presents weighted student response, exclusion, and full-time remote rates, by school type, for eighth-grade private school students in the national U.S. history sample. Separate exclusion rates are provided for students with disabilities (SD) and English learners (EL).

A weighted student response rate indicates the percentage of the student population that is directly represented by the assessed students from within participating schools. A weighted exclusion rate indicates the percentage of students in the population that would be excluded from the assessment. The weighted student full-time remote rate indicates the percentage of the student population that is full-time remote (enrolled in brick-and-mortar schools but do not attend school in person).

Weighted student response, exclusion, and full-time remote rates for eighth-grade private schools, national U.S. history assessment, by school type: 2022

| School type | Weighted student response rate (percent) | Weighted percentage of all students who were SD and excluded | Weighted percentage of all students who were EL and excluded | Weighted student full-time remote rate (percent) |
|---|---|---|---|---|
| All private | 93.57 | # | # | 0.48 |
| Catholic | 94.26 | # | # | # |
| Non-Catholic | 91.51 | # | # | 0.80 |

# Rounds to zero.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 U.S. History Assessment.

NAEP Technical Documentation School Sample Selection for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The sampled schools for the eighth-grade private school national assessments in civics and U.S. history came from two frames: the primary private school sample frame constructed from the Private School Universe Survey (PSS) file and the supplemental new-school sampling frame. Schools were sampled from each school frame with probability proportional to size using systematic sampling. Prior to sampling, schools in each frame were sorted by the appropriate implicit stratification variables in a serpentine order within each explicit sampling stratum. (For details on explicit and implicit strata used for these samples see the stratification page.) A school's measure of size was a complex function of the school's estimated grade enrollment. Only one hit was allowed for each school.
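Systematic sampling with probability proportional to size can be sketched as follows. This is a simplified illustration, not NAEP's production procedure. It assumes the frame is already sorted (for example, in serpentine order within a stratum) and that no measure of size exceeds the sampling interval, which is one way to guarantee at most one hit per school. The function name, the frame, and the measures of size are all hypothetical.

```python
import random


def pps_systematic_sample(frame, n, rng):
    """Select n schools from `frame`, a sorted list of (school_id, measure_of_size).

    A random start is drawn in [0, interval) and selection points are laid
    down every `interval` units along the cumulated measures of size.
    """
    total = sum(mos for _, mos in frame)
    interval = total / n
    if max(mos for _, mos in frame) > interval:
        # Assumption: sizes are capped beforehand so no school is hit twice.
        raise ValueError("a measure of size exceeds the sampling interval")

    start = rng.random() * interval
    points = [start + k * interval for k in range(n)]

    selected = []
    cumulative = 0.0
    next_point = 0
    for school_id, mos in frame:
        cumulative += mos
        # A school is hit when a selection point falls inside its size range.
        while next_point < n and points[next_point] < cumulative:
            selected.append(school_id)
            next_point += 1
    return selected


# Hypothetical sorted frame with made-up measures of size.
frame = [("A", 10), ("B", 20), ("C", 15), ("D", 25), ("E", 5), ("F", 25)]
sample = pps_systematic_sample(frame, n=4, rng=random.Random(2022))
print(sample)
```

Because the selection points are evenly spaced along the sorted frame, the sort order acts as an implicit stratification: the sample is spread across the sort variables roughly in proportion to their share of the total measure of size.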

Schools from the PSS-based frame were sampled at a rate that would yield a national sample of 1,600 assessed students (800 each from the Catholic and non-Catholic school strata across both subjects). Catholic schools from the new-school frames were sampled at the same rate as those from the PSS-based frame.

NAEP Technical Documentation Computation of Measures of Size for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

In designing the eighth-grade private school civics and U.S. history assessment samples, five objectives underlie the process of determining the probability of selection for each school and the number of students to be sampled from each selected school:

- to meet the target student sample size for each grade;
- to select an equal-probability sample of students;
- to limit the number of students selected from any one school;
- to ensure that the sample within a school does not include a very high percentage of the students in the school, unless all students are included; and
- to reduce the sampling rate of small schools, in recognition of the greater cost and burden per student of conducting assessments in such schools.

The goal in determining the school's measure of size is to optimize across the last four objectives in terms of maintaining the precision of estimates and the cost effectiveness of the sample design.

Therefore, to meet the target student sample size objective and achieve a reasonable compromise among the other four objectives, the following algorithm was used to assign a measure of size to each school based on its estimated grade enrollment as indicated on the sampling frame.

In the formula below, `P_{s}` is a primary sampling unit (PSU) weight associated with the Private School Universe Survey (PSS) area sample, `x_{js}` is the estimated grade enrollment for school `s` in stratum `j`, `y_{j}` is the target within-school student sample size for stratum `j`, and `z_{j}` is the within-school take-all student cutoff for stratum `j`, to which school `s` belongs.

For grade 8, the within-school target sample size (`y_{j}`) was 50 and take-all cutoff was 52. The preliminary measure of size (MOS) was calculated as follows:

\begin{equation}
MOS_{js} = P_{s} \times \left\{ \begin{array}{ll}
x_{js} & \text{if } z_{j} < x_{js} \\[2pt]
y_{j} & \text{if } 20 < x_{js} \leq z_{j} \\[2pt]
\left(\dfrac{y_j}{20}\right) \times x_{js} & \text{if } 5 < x_{js} \leq 20 \\[2pt]
\dfrac{y_j}{4} & \text{if } x_{js} \leq 5
\end{array}\right.
\end{equation}
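The piecewise rule above can be written as a short Python function. This is a sketch for illustration only: the function name `preliminary_mos` and its parameter names are assumptions, and the defaults use the grade 8 values quoted in the text (target of 50, take-all cutoff of 52).

```python
def preliminary_mos(enrollment, psu_weight=1.0, target=50, take_all_cutoff=52):
    """Preliminary measure of size for one school (illustrative sketch).

    enrollment      -- estimated grade enrollment, x_js
    psu_weight      -- PSU weight, P_s
    target          -- within-school target sample size, y_j
    take_all_cutoff -- within-school take-all cutoff, z_j
    """
    if enrollment > take_all_cutoff:
        base = enrollment                    # large schools: proportional to size
    elif enrollment > 20:
        base = target                        # mid-size schools: constant
    elif enrollment > 5:
        base = (target / 20) * enrollment    # small schools: reduced linearly
    else:
        base = target / 4                    # very small schools: floor
    return psu_weight * base
```

For example, with the default grade 8 values a school with 100 eighth-graders gets a measure of size of 100, a school with 30 gets 50, a school with 10 gets 25, and a school with 3 gets 12.5.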

The preliminary school measure of size was rescaled to create an expected number of hits by applying a multiplicative constant `b_{j}`, which varies by school type. It follows that the final measure of size, `E_{js}`, was defined as:

\begin{equation}
E_{js}=\min(b_{j}\times MOS_{js}, u_{j}),
\end{equation}

where `u_{j}` is the maximum number of hits allowed. For the 2022 private schools sample, the limit was one hit.

One can choose a value of `b_{j}` such that the expected overall student sample yield matches the desired targets specified by the design, where the expected yield is calculated by summing the product of an individual school’s probability and its student sample yield across all schools in the frame.

The school's probability of selection `\pi_{js}` was given by:

\begin{equation}
\pi_{js}=\min(E_{js},1).
\end{equation}
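One way to find a scaling constant that hits a student target is a simple bisection search, sketched below. The function names, the `(mos, enrollment)` input layout, and the use of bisection are all assumptions made for illustration; the source states only that `b_{j}` is chosen so that the expected yield matches the target. The sketch also ignores attrition adjustments and assumes every measure of size is positive.

```python
def student_yield(enrollment, target=50, take_all_cutoff=52):
    """Students taken from a selected school: all of them up to the cutoff."""
    return enrollment if enrollment <= take_all_cutoff else target


def selection_probability(mos, b, max_hits=1.0):
    """pi = min(E, 1), where E = min(b * MOS, u)."""
    expected_hits = min(b * mos, max_hits)
    return min(expected_hits, 1.0)


def expected_yield(schools, b):
    """Sum over the frame of selection probability times student yield.

    schools: list of (mos, enrollment) pairs (hypothetical layout).
    """
    return sum(selection_probability(mos, b) * student_yield(x)
               for mos, x in schools)


def solve_scaling_constant(schools, target_students, iterations=100):
    """Bisection search for b such that the expected yield meets the target."""
    if target_students > sum(student_yield(x) for _, x in schools):
        raise ValueError("target exceeds the yield of a full census")
    low, high = 0.0, 1.0
    while expected_yield(schools, high) < target_students:
        high *= 2.0  # widen the bracket until the target is reachable
    for _ in range(iterations):
        mid = (low + high) / 2.0
        if expected_yield(schools, mid) < target_students:
            low = mid
        else:
            high = mid
    return high
```

The expected yield is non-decreasing in `b`, which is what makes a bracketing search like this workable.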

In addition, new and newly-eligible Catholic schools were sampled from the new-school frame. The assigned measures of size for these schools,

\begin{equation}
E_{js}=\min(b_{j}\times MOS_{js}\times \pi_{djs}^{-1} , u_{j}),
\end{equation}

used the `b_{j}` and `u_{j}` values from the main school sample for the grade and school type (i.e., the same sampling rates as for the main school sample). The variable `\pi_{djs}` is the probability of selection of diocese `d` into the new-school diocese sample.

In addition, an adjustment was made to the initial measures of size in an attempt to reduce school burden by minimizing the number of schools selected for the NAEP 2022 national civics and U.S. history assessment and the NAEP 2022 national reading and mathematics assessment in private schools. The NAEP sampling procedures used an adaptation of the Keyfitz process to compute conditional measures of size that, by design, minimized the overlap of schools selected for both assessments.
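The source does not give the formulas behind the conditional measures of size. As a rough illustration of the idea only, the sketch below uses a standard two-sample form of overlap minimization: a school's chance of entering the second sample is conditioned on whether it was selected for the first, in a way that keeps its overall probability unchanged. The function name and the exact form are assumptions, not the documented NAEP procedure.

```python
def conditional_probability(p_first, p_second, selected_in_first):
    """Conditional probability of selection into a second sample (sketch).

    p_first           -- probability of selection into the first sample
    p_second          -- desired unconditional probability for the second
    selected_in_first -- whether the school was actually drawn for the first
    """
    if selected_in_first:
        if p_first <= 0.0:
            raise ValueError("a selected school must have p_first > 0")
        # Overlap is allowed only when it cannot be avoided.
        return max(0.0, (p_first + p_second - 1.0) / p_first)
    if p_first >= 1.0:
        raise ValueError("a certainty school cannot be unselected")
    return min(1.0, p_second / (1.0 - p_first))
```

Averaging the two conditional probabilities over the first-sample outcome returns `p_second`, so the second sample keeps its intended selection probabilities while the overlap is as small as possible.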

NAEP Technical Documentation School Sample Sizes: PSS-Based and New-School Sampling Frames for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The following table presents the number of schools selected for the eighth-grade private school sample by sampling frame (Private School Universe Survey [PSS]-based and new-school) and private school affiliation.

Number of schools in the total, PSS-based and new-school samples, grade 8 private national assessment, by school type: 2022

| School type | Total school sample | PSS-based school sample | New-school sample |
| --- | --- | --- | --- |
| All private | 170 | 170 | # |
| Catholic | 40 | 40 | # |
| Non-Catholic private | 110 | 110 | † |
| Unknown affiliation | 30 | 30 | † |

# Rounds to zero.

† Not applicable.

NOTE: Numbers of schools are rounded to nearest ten. Detail may not sum to totals due to rounding.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation Stratification of Schools for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The purpose of school stratification is to increase the efficiency and ensure the representativeness of school samples in terms of important school-level characteristics, such as geography (e.g., census region), urbanicity, and race/ethnicity composition. NAEP school sampling utilizes two types of stratification: explicit and implicit.

Explicit stratification partitions the sampling frame into mutually exclusive groupings called strata. The systematic samples selected from these strata are independent, meaning that each sample is selected with its own unique random start. Implicit stratification involves sorting the sampling frame, as opposed to grouping the frame. For NAEP, schools are sorted in serpentine fashion by key school characteristics within sampling strata and sampled systematically using this ordering. This type of stratification ensures the representativeness of the school samples with respect to the key school characteristics.

Explicit stratification for the NAEP 2022 private school samples was by private school type: Catholic, non-Catholic, and unknown affiliation. Private school affiliation was unknown for nonrespondents to the NCES Private School Universe Survey (PSS) for the past three cycles.

The implicit stratification of the schools involved four dimensions. Within each explicit stratum, the private schools were hierarchically sorted by census region, urbanicity status, race/ethnicity status, and estimated grade enrollment. The implicit stratification in this four-fold hierarchical stratification was achieved via a "serpentine sort".
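A serpentine sort reverses the sort direction of each lower-level variable every time a new group begins at the level above, so that neighboring schools on the sorted frame stay similar across group boundaries. The sketch below is one way to implement it; the function name and the dictionary-based record layout are assumptions for illustration.

```python
from itertools import groupby


def serpentine_sort(records, keys):
    """Hierarchical serpentine sort (illustrative sketch).

    records: list of dicts; keys: sort variables from highest to lowest level.
    Each level alternates between ascending and descending order every time
    a new group is sorted at that level.
    """
    reverse_flags = [False] * len(keys)

    def sort_level(group, level):
        if level == len(keys):
            return list(group)
        key = keys[level]
        ordered = sorted(group, key=lambda r: r[key],
                         reverse=reverse_flags[level])
        # Flip the direction for the next group sorted at this level.
        reverse_flags[level] = not reverse_flags[level]
        result = []
        for _, subgroup in groupby(ordered, key=lambda r: r[key]):
            result.extend(sort_level(list(subgroup), level + 1))
        return result

    return sort_level(records, 0)
```

For the private school frame described here, `keys` would list the four implicit stratification variables in order (census region, urbanicity, race/ethnicity stratum, estimated grade enrollment); the field names used for them are up to the caller.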

Census region was used as the first level of implicit stratification for the NAEP 2022 private school sample. For Catholic and non-Catholic schools, all four census regions were used as strata. For schools with unknown affiliation, two strata based on census region were formed by combining the Northeast and Midwest into one stratum and the South and West into another.

The next level of stratification was an urbanicity classification based on urban-centric locale, as specified on the PSS. Within a census region-based stratum, urban-centric locale cells that were too small were collapsed. The criterion for adequacy was that the cell had to have an expected school sample size of at least six.

The urbanicity variable was equal to the original urban-centric locale if no collapsing was necessary to cover an inadequate original cell. If collapsing was necessary, the scheme was to first collapse within the four major strata (city, suburbs, town, and rural). For example, if the expected number of large city schools sampled was less than six, large city was collapsed with midsize city. If the collapsed cell was still inadequate, it was further collapsed with small city. If a major stratum cell (all three cells collapsed together) was still deficient, it was collapsed with a neighboring major stratum cell. For example, city would be collapsed with suburbs.

The last stage of stratification was a division of the geographic/urbanicity strata into race/ethnicity strata if the expected number of schools sampled was large enough (i.e., at least equal to 12). This was done by deciding first on the number of race/ethnicity strata and then dividing the geography/urbanicity stratum into that many pieces. The school frame was sorted by the percentage of students in each school who were Black, Hispanic, or American Indian/Alaska Native. The three racial/ethnic groups defining the race/ethnicity strata were those that have historically performed substantially lower on NAEP assessments than White students. The sorted list was then divided into pieces, with roughly an equal expected number of sampled schools in each piece.

Finally, schools were sorted within stratification cells by estimated grade enrollment.

NAEP Technical Documentation Student Sample Selection for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The sampling of students for the private school assessments in civics and U.S. history at eighth grade involved two steps: (1) sampling of students in the targeted grade (eighth) from each sampled school, and (2) assignment of assessment subject (civics or U.S. history) to the sampled students.

Sampling Students within Sampled Schools

Within each sampled school, a sample of students was selected from a list of students in the targeted grade such that every student had an equal chance of selection. The student lists were submitted either electronically using a system known as E-filing or on paper. In E-filing for private schools, student lists are submitted one school at a time by school coordinators in Excel files. E-filing allows schools to easily submit student demographic data electronically with the student lists, easing the burden on field supervisors and school coordinators.

Schools that are unable to submit their student lists using the E-filing system provide hardcopy lists to NAEP field supervisors. In 2022, most eighth-grade private schools in the national assessment in civics and U.S. history provided hardcopy lists. About 76 percent of the participating schools submitted hardcopy lists while 24 percent of the participating schools E-filed.

In year-round multi-track schools, students in tracks scheduled to be on break on the assessment day were removed from the student lists prior to sampling. (Student base weights were adjusted to account for these students.)

The sampling process was very similar, regardless of list submission type. The sampling process was systematic (e.g., if the sampling rate was one-half, a random starting point of one or two was chosen, and every other student on the list was selected). For E-filed schools only, where demographic data was submitted for every student in the school, students were sorted by gender and race/ethnicity before the sample was selected to implicitly stratify the sample.

In schools with up to 52 students in the targeted grade, all students were selected. In schools with more than 52 students, systematic samples of 50 students were selected. Some students enrolled in the school after the sample was selected. In such cases, new enrollees were sampled at the same rate as the students on the original list.
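The within-school rule can be sketched as follows. The function name `sample_students` is an assumption, and so is the use of a fractional sampling interval with a random start; the source describes the process only as systematic with an equal chance of selection for every listed student.

```python
import random


def sample_students(student_list, target=50, take_all_cutoff=52, rng=None):
    """Equal-probability systematic sample of students (illustrative sketch).

    Takes every student when the list has at most `take_all_cutoff` entries,
    otherwise draws `target` students at evenly spaced positions.
    """
    rng = rng or random.Random()
    n = len(student_list)
    if n <= take_all_cutoff:
        return list(student_list)
    interval = n / target              # fractional skip interval, > 1 here
    start = rng.random() * interval    # random start within the first interval
    positions = [min(int(start + k * interval), n - 1) for k in range(target)]
    return [student_list[p] for p in positions]
```

Because the interval exceeds one whenever sampling applies, the selected positions are distinct and each student on the list has the same chance, `target / n`, of being selected.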

Assigning Assessment Subject to Sampled Students

Sampled students, including new enrollees, in each participating sampled school were assigned to either the civics or U.S. history assessment at rates of 49 percent and 51 percent, respectively, using a process known as spiraling. In this process, test forms were randomly assigned to sampled students from test form sets that had, on average, a ratio of 26 civics forms to 26 U.S. history forms. Students receiving a civics form were in the civics assessment, and students receiving a U.S. history form were in the U.S. history assessment.

NAEP Technical Documentation Substitute Schools for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

Though efforts were made to secure the participation of all schools selected, it was anticipated that not all schools would choose to participate. NAEP uses school substitution to mitigate the effect of bias due to nonresponse. A nonparticipating sampled school is replaced by its substitute when the original school is considered a final refusal.

For the eighth-grade private school national sample, substitute schools were preselected for all sampled schools with known affiliation from the Private School Universe Survey (PSS)-based frame by sorting the school frame file according to the actual order used in sample selection (the implicit stratification). Sampled schools with unknown affiliation were not assigned substitutes.

Schools were disqualified as potential substitutes if they were already selected in the private school sample or assigned as a substitute for another private school (earlier in the sort ordering).

The two candidates for substitutes were then the two nearest neighbors of the originally sampled school in the frame sort order. To be eligible as a potential substitute, the neighbor needed to be a nonsampled school (for any grade), and within the same explicit sampling stratum and of the same affiliation as the originally sampled school. If both nearest neighbors were eligible to be substitutes, the one with a closer grade enrollment was chosen. If both nearest neighbors had the same grade enrollment (an uncommon occurrence), one of the two was randomly selected.
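The nearest-neighbor rule can be illustrated with a short sketch. The function name, the record fields (`stratum`, `affiliation`, `enrollment`), and the `unavailable` set are hypothetical. The tie-break shown treats two neighbors that are equally close in enrollment as tied, which is a slight generalization of the "same grade enrollment" case in the text.

```python
import random


def choose_substitute(frame, sampled_index, unavailable, rng=None):
    """Pick a substitute from the two neighbors in frame sort order (sketch).

    frame         -- list of dicts in the sort order used for sampling
    sampled_index -- position of the originally sampled school
    unavailable   -- positions already sampled or already used as substitutes
    Returns the position of the substitute, or None if neither neighbor fits.
    """
    rng = rng or random.Random()
    original = frame[sampled_index]
    candidates = []
    for i in (sampled_index - 1, sampled_index + 1):
        if not 0 <= i < len(frame) or i in unavailable:
            continue
        neighbor = frame[i]
        same_stratum = neighbor["stratum"] == original["stratum"]
        same_affiliation = neighbor["affiliation"] == original["affiliation"]
        if same_stratum and same_affiliation:
            candidates.append(i)
    if not candidates:
        return None
    gaps = {i: abs(frame[i]["enrollment"] - original["enrollment"])
            for i in candidates}
    smallest = min(gaps.values())
    closest = [i for i in candidates if gaps[i] == smallest]
    # A random draw settles the (uncommon) tie.
    return closest[0] if len(closest) == 1 else rng.choice(closest)
```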

In the eighth-grade private school sample, seven substitute schools ultimately participated.

NAEP Technical Documentation Target Population for the 2022 Eighth-Grade Private School National Assessment in Civics and U.S. History

The target populations for the 2022 eighth-grade private school national assessment in civics and U.S. history were defined as all eighth-grade students who were enrolled in private schools located within the 50 states and the District of Columbia.

NAEP Technical Documentation 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The eighth-grade public school samples for the national assessments in civics and U.S. history were designed to produce nationally representative samples of students enrolled in grade eight in public schools in the United States. The target sample size of assessed students for the eighth-grade public school samples was 14,400 (7,200 per subject). Prior to sampling, the target sample sizes were adjusted upward to offset expected school and student attrition due to nonresponse and ineligibility.

Samples were selected using a two-stage probability-based design that involved selection of schools from within strata and selection of students within schools. The first-stage sample of schools was selected with probability proportional to a measure of size based on estimated grade-specific enrollment in the schools.

The sampling of students at the second stage involved two steps: (1) sampling of students in the targeted grade (eighth) from each sampled school, and (2) assignment of assessment subject (civics or U.S. history) to the sampled students.


NAEP Technical Documentation Ineligible Schools for the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The Common Core of Data (CCD)-based public school frame, from which most of the sampled schools were drawn, corresponds to the 2019–2020 school year, two years prior to the assessment school year. During the intervening period, some of these schools either closed, no longer offered the grade of interest, or were ineligible for other reasons. In such cases, the sampled school was coded as ineligible.


NAEP Technical Documentation Eligibility Status of Schools Sampled for the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The following table shows the unweighted counts and percentages of sampled schools that were eligible and ineligible, by reason for ineligibility, for the eighth-grade public school sample for the national assessments in civics and U.S. history.

Sampled public schools, eighth-grade national assessment, by eligibility status: 2022

| Eligibility status | Unweighted count of schools | Unweighted percentage |
| --- | --- | --- |
| All sampled public schools | 400 | 100.00 |
| Eligible | 390 | 96.51 |
| Ineligible | 14 | 3.49 |
| Has sampled grade, but no eligible students | 0 | 0.00 |
| Does not have sampled grade | 5 | 1.25 |
| Closed | 3 | 0.75 |
| Not a regular school | 6 | 1.50 |
| Duplicate on sampling frame | 0 | 0.00 |
| Other ineligible | 0 | 0.00 |

NOTE: Numbers of schools are rounded to nearest ten, except those pertaining to ineligible schools. Detail may not sum to total due to rounding. Percentages are based on unrounded counts.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation Total and Eligible Sampled Schools for the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The following table presents unweighted counts and percentages of ineligible and eligible schools by census region in the eighth-grade public school sample for the national assessments in civics and U.S. history.

Eligibility status of sampled public schools, eighth-grade national assessment, by census region: 2022

| Census region | Eligibility status | Unweighted count | Unweighted percentage |
| --- | --- | --- | --- |
| Total | Total | 400 | 100.00 |
| Total | Ineligible | 10 | 3.49 |
| Total | Eligible | 390 | 96.51 |
| Northeast | Total | 60 | 100.00 |
| Northeast | Ineligible | 0 | 5.45 |
| Northeast | Eligible | 50 | 94.55 |
| Midwest | Total | 70 | 100.00 |
| Midwest | Ineligible | 0 | 1.41 |
| Midwest | Eligible | 70 | 98.59 |
| South | Total | 170 | 100.00 |
| South | Ineligible | 0 | 2.35 |
| South | Eligible | 170 | 97.65 |
| West | Total | 110 | 100.00 |
| West | Ineligible | 10 | 5.71 |
| West | Eligible | 100 | 94.29 |

NOTE: Numbers of schools are rounded to nearest ten. Detail may not sum to total due to rounding. Percentages are based on unrounded counts.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation Sampling Frame for the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The primary sampling frame for the 2022 eighth-grade public school samples for the civics and U.S. history assessments was developed from the Common Core of Data (CCD) file corresponding to the 2019–2020 school year. The CCD file is the Department of Education’s primary database of public elementary and secondary schools in the United States including U.S. territories. It includes all regular public, state-operated public, Bureau of Indian Education (BIE), and Department of Defense Education Activity (DoDEA) schools open during the 2019–2020 school year. This eighth-grade sampling frame is referred to as the CCD-based sampling frame.


A secondary sampling frame was also created for these samples to account for schools that newly opened or became newly eligible between the 2019–2020 and 2021–2022 school years. This frame contains brand-new and newly-eligible eighth-grade schools and is referred to as the new-school sampling frame.

Both sampling frames excluded ungraded schools, vocational schools with no enrollment, special education-only schools, prison and hospital schools, home school entities, virtual or online schools, adult and evening schools, and juvenile correctional institutions. Vocational schools with no enrollment serve students who split their time between the vocational school and their home school.

NAEP Technical Documentation Eighth-Grade Schools and Enrollment in the 2022 Public School Civics and U.S. History Sampling Frame

The following table presents the number of eighth-grade public schools and their estimated enrollment, as contained in the Common Core of Data (CCD)-based sampling frame, by census region, for the national assessments in civics and U.S. history.

Number of schools and estimated enrollment in CCD-based eighth-grade public school sampling frame, national assessment, by census region: 2022

| Census region | Schools | Percent | Estimated enrollment | Percent |
| --- | --- | --- | --- | --- |
| Total | 29,272 | 100.00 | 3,844,110 | 100.00 |
| Northeast | 4,538 | 15.50 | 587,506 | 15.28 |
| Midwest | 7,843 | 26.79 | 789,395 | 20.54 |
| South | 9,594 | 32.78 | 1,526,006 | 39.70 |
| West | 7,297 | 24.93 | 941,203 | 24.48 |

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation New-School Sampling Frame for the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The primary sampling frame for the 2022 eighth-grade public school sample for the national assessments in civics and U.S. history was constructed using the most current Common Core of Data (CCD) file available from NCES. This file contained schools that were in existence during the 2019–2020 school year (i.e., it was two years out of date). During the subsequent 2-year period, undoubtedly some schools closed, some changed structure (one school becoming two schools, for example), some newly opened, and still others changed their grade span.

A supplemental sample was selected from a list of schools that were new or had become newly eligible sometime after the 2019–2020 school year. The goal was to allow every new school a chance of selection, thereby fully covering the target population of schools in operation during the 2021–2022 school year. It was infeasible to ask every school district in the United States to provide a supplemental school frame, so a two-stage procedure was employed. First, a sample of school districts was selected within each state. Then each State or Trial Urban District Assessment (TUDA) Coordinator was sent a list of the schools within their sampled districts that had been present on the 2019–2020 CCD file. The Coordinators were asked to add in any new schools and update grade span for the schools on this list.

The new-school process began with the preparation of a district-level frame. The starting point was a file containing every public school district in the United States. Specific districts were designated as in sample with certainty. They included the following districts:

- districts in jurisdictions where all schools were selected for sample at either grade 4 or 8;
- state-operated districts;
- districts in states with fewer than 10 districts;
- charter-only districts (that is, districts containing no schools other than charter schools); and
- TUDA districts.

Then noncertainty districts were classified as small, medium, or large based on the number of schools and student enrollment of schools from the CCD-based public school frame.

A district was considered to be small if it contained no more than one school at each targeted grade (4 or 8). During school recruitment, the Coordinators were asked to identify schools within their district that newly offered the targeted grade. Every identified new school was added to the sample. From a sampling perspective, the new school was viewed as an “annex” to the sampled school which meant that it had a well-defined probability of selection equal to that of the sampled school. When a school in a small district was sampled from the CCD-based frame, its associated new school was automatically sampled as well.

Within each jurisdiction, districts that were neither certainty selections nor small were divided into two strata, one containing large-size districts and a second containing medium-size districts. These strata were defined by computing the percentage of jurisdiction grade 4 and 8 enrollment represented by each district, sorting in descending order, and cumulating the percentages. All districts up to and including the first district at or above the 80th cumulative percentage were defined as large districts. The remaining districts were defined as medium districts.

A simplified example is given below. The state's districts are ordered by descending percentage enrollment. The first six become large districts and the last six become medium districts.

Large-size and medium-size district strata example, by enrollment, stratum, and district, 2022

| District | Percentage enrollment | Cumulative percentage enrollment | Stratum |
| --- | --- | --- | --- |
| 1 | 20 | 20 | L |
| 2 | 20 | 40 | L |
| 3 | 15 | 55 | L |
| 4 | 10 | 65 | L |
| 5 | 10 | 75 | L |
| 6 | 10 | 85 | L |
| 7 | 5 | 90 | M |
| 8 | 2 | 92 | M |
| 9 | 2 | 94 | M |
| 10 | 2 | 96 | M |
| 11 | 2 | 98 | M |
| 12 | 2 | 100 | M |

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Grade 8 Civics and U.S. History Assessments.

The target sample size for each jurisdiction was 10 districts total across the medium-size and large-size district strata. Where possible, eight districts were selected from the large-size district stratum and two districts from the medium-size district stratum. However, in the example above, since there are only six large districts, all of the districts in the large district stratum and four districts from the medium district stratum would have been selected for the new-school inquiry.
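The classification and allocation rules can be reproduced for the simplified example with a short sketch. The function names are assumptions. The handling of a jurisdiction with too few medium-size districts (shifting the shortfall to the large-size stratum) is also an assumption, since the text covers only the case of too few large districts.

```python
def classify_districts(percent_enrollment, threshold=80.0):
    """Label districts 'L' (large) or 'M' (medium) by cumulative enrollment.

    percent_enrollment: dict mapping district -> percentage of jurisdiction
    enrollment. Districts up to and including the first one at or above the
    threshold are large; the rest are medium.
    """
    ordered = sorted(percent_enrollment.items(),
                     key=lambda item: item[1], reverse=True)
    strata = {}
    cumulative = 0.0
    threshold_reached = False
    for district, percent in ordered:
        strata[district] = "M" if threshold_reached else "L"
        cumulative += percent
        if cumulative >= threshold:
            threshold_reached = True
    return strata


def allocate_district_sample(n_large, n_medium, total=10, preferred_large=8):
    """Split the district sample between the large and medium strata."""
    take_large = min(n_large, preferred_large)
    take_medium = min(n_medium, total - take_large)
    # Assumed rule: any shortfall in the medium stratum goes back to large.
    take_large = min(n_large, total - take_medium)
    return take_large, take_medium
```

Applied to the twelve districts in the example, the first six are labeled large and the last six medium, and the allocation is six large districts and four medium districts.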

If sampling was needed in the medium-size district stratum, districts in this stratum were selected with equal probability. If sampling was needed in the large-size district stratum, the districts in this stratum were sampled with probability proportional to enrollment. These probabilities were retained and used in later stages of sampling and weighting of new schools.

The selected districts in each jurisdiction were then sent a listing of all their schools that appeared on the 2019–2020 CCD file and were asked to provide information about the new schools not included in the file and grade span changes of existing schools. These listings provided by the selected districts were used as sampling frames for selection of new public schools and updates of existing schools. This process was conducted through the NAEP State or TUDA Coordinator in each jurisdiction. The Coordinators were sent the information for all sampled districts in their respective states and were responsible for returning the completed updates.

The following table presents the number and percentage of schools and average estimated grade enrollment for the eighth-grade new-school frame by census region.

Eighth-grade new school frame for the public school national assessment: number and percentage of schools and estimated enrollment, by census region: 2022

| Census region | Schools | Percentage | Mean school size |
| --- | --- | --- | --- |
| Total | 340 | 100.00 | 57 |
| Northeast | 51 | 15.00 | 104 |
| Midwest | 68 | 20.00 | 36 |
| South | 159 | 46.76 | 51 |
| West | 62 | 18.24 | 54 |

NOTE: Detail may not sum to totals because of rounding.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Grade 8 Civics and U.S. History Assessments.

NAEP Technical Documentation School and Student Participation in the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The tables linked to the right present weighted school and student participation rates, student exclusion rates, and student full-time remote rates for the eighth-grade public school national civics and U.S. history samples.

A weighted school participation rate indicates the percentage of the student population that is directly represented by the participating school sample.

A weighted student participation rate indicates the percentage of the student population that is directly represented by the assessed students from within participating schools.

A weighted exclusion rate indicates the percentage of students in the population that would be excluded from the assessment. Students are generally excluded from a NAEP assessment if they have a disability or limited English language proficiency that prevents them from taking the assessment altogether or the accommodations they require to take the assessment were unavailable.

A weighted full-time remote rate indicates the percentage of the student population that is full-time remote.

Weighted school participation rates are calculated by dividing the sum of school base weights, weighted by student enrollment of the targeted grade, for all participating schools by the sum of the base weights, weighted by student enrollment of the target grade, for all eligible schools. Eligible schools are all sampled schools except those considered out-of-scope. The base weight is assigned to all sampled schools and is the inverse of the probability of selection. The weighted school participation rates in these tables reflect participation prior to substitution. That is, participating substitute schools that took the place of refusing originally sampled schools are not included in the numerator.
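The school-level calculation can be sketched as below. The function name and the record fields are hypothetical. The input is assumed to contain only originally sampled schools, so that substitutes never enter the numerator, consistent with rates computed prior to substitution.

```python
def weighted_school_participation_rate(schools):
    """Weighted school participation rate in percent (illustrative sketch).

    schools: originally sampled schools, each a dict with
      base_weight  -- inverse of the school's probability of selection
      enrollment   -- enrollment in the targeted grade
      eligible     -- False for out-of-scope schools
      participated -- True if the original school took part
    """
    def size(school):
        return school["base_weight"] * school["enrollment"]

    eligible = [s for s in schools if s["eligible"]]
    numerator = sum(size(s) for s in eligible if s["participated"])
    denominator = sum(size(s) for s in eligible)
    return 100.0 * numerator / denominator
```

For instance, one participating school with weight 10 and 100 students, one refusing school with weight 5 and 200 students, and one out-of-scope school would give a rate of 50 percent.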

Weighted student participation rates are calculated by dividing the sum of the student base weights for all assessed students by the sum of the student base weights for all assessable students. (See below for the response dispositions of NAEP sampled students.) Students deemed assessable are those who were assessed or absent. They do not include students that were not eligible (primarily made up of withdrawn or graduated students) or students with disabilities (SD) or English learners (EL) who were excluded from the assessment.

Weighted student exclusion rates are calculated by dividing the sum of the school nonresponse-adjusted student base weights for all excluded students by the sum for all assessable and excluded students.

Weighted student full-time remote rates are calculated by dividing the sum of the school nonresponse-adjusted student base weights for all full-time remote students by the sum for all assessable, excluded, and full-time remote students.
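The two rates above differ only in which dispositions enter the denominator. The sketch below makes that explicit. It assumes the weights passed in are already the school nonresponse-adjusted student base weights, and the disposition labels are hypothetical.

```python
from collections import defaultdict


def exclusion_and_remote_rates(students):
    """students: list of (adjusted_weight, disposition) pairs.

    Returns (exclusion_rate, full_time_remote_rate), both in percent.
    """
    totals = defaultdict(float)
    for weight, disposition in students:
        totals[disposition] += weight

    assessable = totals["assessed"] + totals["absent"]
    excluded = totals["excluded"]
    remote = totals["remote"]

    # Exclusion rate: excluded over assessable plus excluded.
    exclusion_rate = 100.0 * excluded / (assessable + excluded)
    # Full-time remote rate: remote over assessable, excluded, and remote.
    remote_rate = 100.0 * remote / (assessable + excluded + remote)
    return exclusion_rate, remote_rate


# Made-up example weights; withdrawn students enter neither rate.
sample = [
    (85.0, "assessed"),
    (10.0, "absent"),
    (5.0, "excluded"),
    (25.0, "remote"),
    (40.0, "withdrawn"),
]
exclusion_rate, remote_rate = exclusion_and_remote_rates(sample)
print(exclusion_rate, remote_rate)
```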

Every student sampled for NAEP is classified into one of the following response disposition categories:

Assessed

Absent

Excluded (must be SD, EL, or SD and EL)

Withdrawn or Graduated (ineligible)

Full-time remote

Assessed students were students who completed an assessment.

Absent students were students who were eligible to take an assessment but were absent from the initial session and the makeup session if one was offered. (Note, some schools, not all, had make-up sessions for students who were absent from the initial session.)

Excluded students were determined by their school to be unable to meaningfully take the NAEP assessment in their assigned subject, even with an accommodation. Excluded students must also be classified as SD and/or EL.

Withdrawn or graduated students are those who have left the school before the original assessment. These students are considered ineligible for NAEP.

Full-time remote students are enrolled in brick-and-mortar schools but do not attend school in person. They are considered not assessable for NAEP.
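One way to picture the five dispositions and the rules attached to them is as an enumeration with two derived groupings. The names below are hypothetical and only mirror the definitions given in the text.

```python
from enum import Enum


class Disposition(Enum):
    ASSESSED = "assessed"
    ABSENT = "absent"
    EXCLUDED = "excluded"
    WITHDRAWN_OR_GRADUATED = "withdrawn or graduated"
    FULL_TIME_REMOTE = "full-time remote"


# Assessable students are those who were assessed or absent.
ASSESSABLE = frozenset({Disposition.ASSESSED, Disposition.ABSENT})
# Withdrawn or graduated students are ineligible for NAEP.
INELIGIBLE = frozenset({Disposition.WITHDRAWN_OR_GRADUATED})


def check_exclusion(disposition, is_sd, is_el):
    """Enforce the rule that an excluded student must be SD and/or EL."""
    if disposition is Disposition.EXCLUDED and not (is_sd or is_el):
        raise ValueError("excluded students must be classified as SD and/or EL")
    return disposition


# An excluded English learner passes the check.
print(check_exclusion(Disposition.EXCLUDED, is_sd=False, is_el=True))
```

Excluded and full-time remote students fall in neither grouping: they are eligible but not assessable, which is why they appear only in the denominators of the exclusion and full-time remote rates.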

NAEP Technical Documentation Weighted School Response Rates for the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The following table presents unweighted counts of eligible sampled and participating schools and weighted school response rates, by census region, for the eighth-grade public school national civics and U.S. history samples.

A weighted school response rate indicates the percentage of the student population that is directly represented by the participating school sample. These response rates are based on the original sample of schools (excluding substitutes).

Eligible and participating school counts and weighted school response rates for eighth-grade public schools, national civics and U.S. history assessments, by census region: 2022

| Census region | Number of eligible sampled schools | Number of participating schools | Weighted school response rate (percent) |
| --- | --- | --- | --- |
| National | 390 | 360 | 91.00 |
| Northeast | 50 | 50 | 87.13 |
| Midwest | 70 | 70 | 91.59 |
| South | 170 | 160 | 96.50 |
| West | 100 | 80 | 83.29 |

NOTE: Numbers of schools are rounded to nearest ten. Detail may not sum to totals due to rounding.

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 National Civics and U.S. History Assessments.

NAEP Technical Documentation Weighted Student Response and Exclusion Rates for the 2022 Eighth-Grade Public School National Civics Assessment

The following table presents weighted student response, exclusion, and full-time remote rates, by census region, for eighth-grade public school students in the national civics sample. Separate exclusion rates are provided for students with disabilities (SD) and English learners (EL).

A weighted student response rate indicates the percentage of the student population that is directly represented by the assessed students from within participating schools. A weighted exclusion rate indicates the percentage of students in the population that would be excluded from the assessment. The weighted student full-time remote rate indicates the percentage of the student population that is full-time remote (enrolled in brick-and-mortar schools but do not attend school in person).

Weighted student response, exclusion, and full-time remote rates for eighth-grade public schools, national civics assessment, by census region: 2022

| Census region | Weighted student response rates (percent) | Weighted percentage of all students who were SD and excluded | Weighted percentage of all students who were EL and excluded | Weighted student full-time remote rates (percent) |
| --- | --- | --- | --- | --- |
| National | 89.96 | 1.16 | 0.60 | 1.30 |
| Northeast | 87.98 | 0.95 | 0.61 | 0.36 |
| Midwest | 91.13 | 1.04 | 0.49 | 0.77 |
| South | 90.52 | 1.21 | 0.48 | 1.54 |
| West | 88.98 | 1.31 | 0.90 | 1.97 |

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 Civics Assessment.

NAEP Technical Documentation Weighted Student Response and Exclusion Rates for the 2022 Eighth-Grade Public School National U.S. History Assessment

The following table presents weighted student response, exclusion, and full-time remote rates, by census region, for eighth-grade public school students in the national U.S. history sample. Separate exclusion rates are provided for students with disabilities (SD) and English learners (EL).

A weighted student response rate indicates the percentage of the student population that is directly represented by the assessed students from within participating schools. A weighted exclusion rate indicates the percentage of students in the population that would be excluded from the assessment. The weighted student full-time remote rate indicates the percentage of the student population that is full-time remote (enrolled in brick-and-mortar schools but do not attend school in person).

Weighted student response, exclusion, and full-time remote rates for eighth-grade public schools, national U.S. history assessment, by census region: 2022

| Census region | Weighted student response rates (percent) | Weighted percentage of all students who were SD and excluded | Weighted percentage of all students who were EL and excluded | Weighted student full-time remote rates (percent) |
| --- | --- | --- | --- | --- |
| National | 89.58 | 1.42 | 0.51 | 1.09 |
| Northeast | 88.37 | 1.33 | 0.58 | 0.64 |
| Midwest | 90.85 | 0.98 | 0.40 | 0.86 |
| South | 89.70 | 1.54 | 0.52 | 1.08 |
| West | 88.87 | 1.68 | 0.56 | 1.63 |

SOURCE: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress (NAEP), 2022 U.S. History Assessment.

NAEP Technical Documentation School Sample Selection for the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

The sampled schools for the eighth-grade public school national assessments in civics and U.S. history came from two frames: the primary public school sample frame constructed from the Common Core of Data (CCD) and the supplemental new-school sample frame. Schools were sampled from each school frame with probability proportional to size using systematic sampling. Prior to sampling, schools in each frame were sorted by the appropriate implicit stratification variables in a serpentine order. (For details on the implicit stratification variables used for these samples see the stratification page.) A school's measure of size was a complex function of the school's estimated grade enrollment. Only one hit was allowed for each school.
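Systematic sampling with probability proportional to size can be illustrated with a short sketch. It assumes the schools are already in their serpentine sort order, that a sampling interval has already been derived from the target sampling rate, and that each measure of size is capped at the interval so that no school is hit more than once. The function name, parameters, and example values are hypothetical, and the actual NAEP measure of size is not modeled here.

```python
import random


def pps_systematic_sample(sizes, interval, start=None, rng=random):
    """Select indices from an ordered list of measures of size.

    sizes    : measures of size, in frame (sorted) order
    interval : sampling interval on the cumulative size scale
    start    : selection start point in [0, interval); random if omitted
    """
    if start is None:
        start = rng.random() * interval

    selected = []
    next_point = start
    cumulative = 0.0
    for index, size in enumerate(sizes):
        # Capping at the interval means a school can be hit at most once.
        cumulative += min(size, interval)
        if next_point < cumulative:
            selected.append(index)
            next_point += interval
    return selected


# Made-up measures of size with a fixed start so the run is reproducible.
sizes = [4, 2, 6, 3, 5, 10, 1, 4]
selected = pps_systematic_sample(sizes, interval=7, start=3)
print(selected)
```

Under this scheme a school's selection probability is its capped measure of size divided by the interval, so a school at or above the interval is selected with certainty.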


Schools from the CCD-based frame were sampled at a rate that would yield a national sample of 14,400 assessed students across both subjects. Schools from the new-school frame were sampled at the same rate as those from the CCD-based frame.

NAEP Technical Documentation Computation of Measures of Size for the 2022 Eighth-Grade Public School National Assessment in Civics and U.S. History

In designing the eighth-grade public school civics and U.S. history assessment samples, six objectives underlie the process of determining the probability of selection for each school and the number of students to be sampled from each selected school:

to meet the overall target student sample size;

to select an equal-probability sample of students from each explicit sampling stratum;

to limit the number of students selected from any one school;

to ensure that the sample within a school does not include a very high percentage of the students in the school, unless all students are included;

to reduce the sampling rate of small schools, in recognition of the greater cost and burden per student of conducting assessments in such schools; and

to increase the number of Black, Hispanic, and American Indian/Alaska Native (AI/AN) students in the sample.

The goal in determining the school's measure of size (MOS) is to optimize across the middle four objectives in terms of maintaining the precision of estimates and the cost effectiveness of the sample design.